About me

Hello there, I am a first-year Ph.D. student in Computer Science at the University of Illinois Urbana-Champaign under the supervision of Professor Tong Zhang. I obtained a BSc degree in Data Science and Technology (DSCT) with a double major in Computer Science from the School of Science at the Hong Kong University of Science and Technology (HKUST).

During my undergraduate years, I worked with Prof. Raymond Chi-Wing WONG on data mining; Prof. Mrinmaya Sachan on natural language processing (NLP); Prof. Chi-Keung TANG and Prof. Yu-Wing TAI on computer vision; and Prof. Tong Zhang on formal mathematics in Lean4.

My ultimate research goal is to build machine learning systems with abilities comparable to those of humans (of course, everybody wants that). Specifically, my research pathway can be formulated as the X2Y system. Currently, I am focusing on how to use formal languages like Lean to formulate the underlying logic of natural language generation.

Education

- Ph.D. in Computer Science, University of Illinois Urbana-Champaign, 2025-present

- Ph.D. Student in Computer Science, University of Wisconsin-Madison, 2024-2025 (transferred to UIUC)

- BSc in Data Science and Technology, double major in Computer Science, Hong Kong University of Science and Technology (HKUST), 2020-2024

- Exchange student at ETH Zurich

Publications

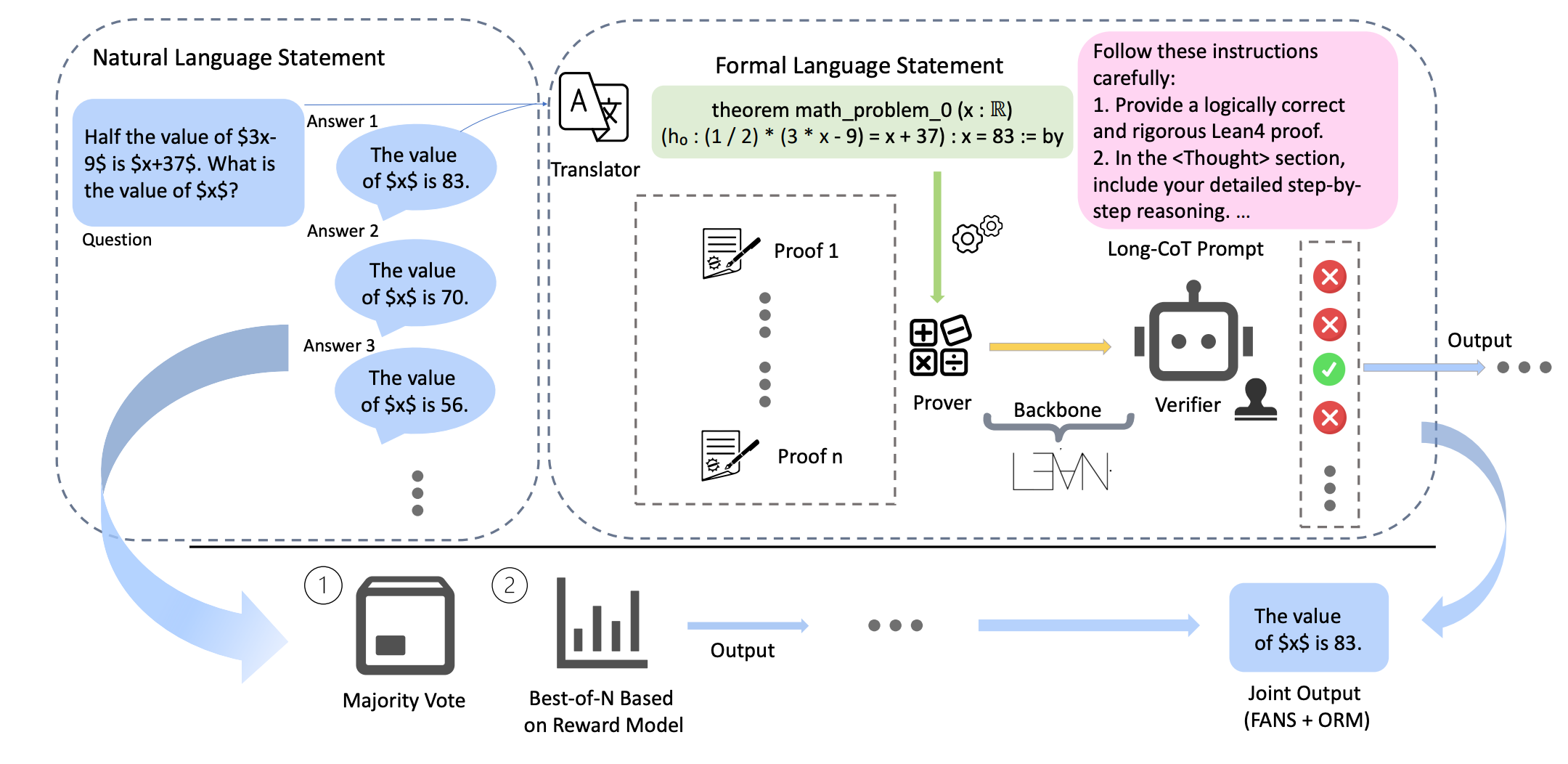

[EMNLP 2025 (Main)] Let’s Reason Formally: Natural-Formal Hybrid Reasoning Enhances LLM’s Math Capability

Ruida Wang*, Yuxin Li*, Yi R. (May) Fung, Tong Zhang (* indicates first authors)

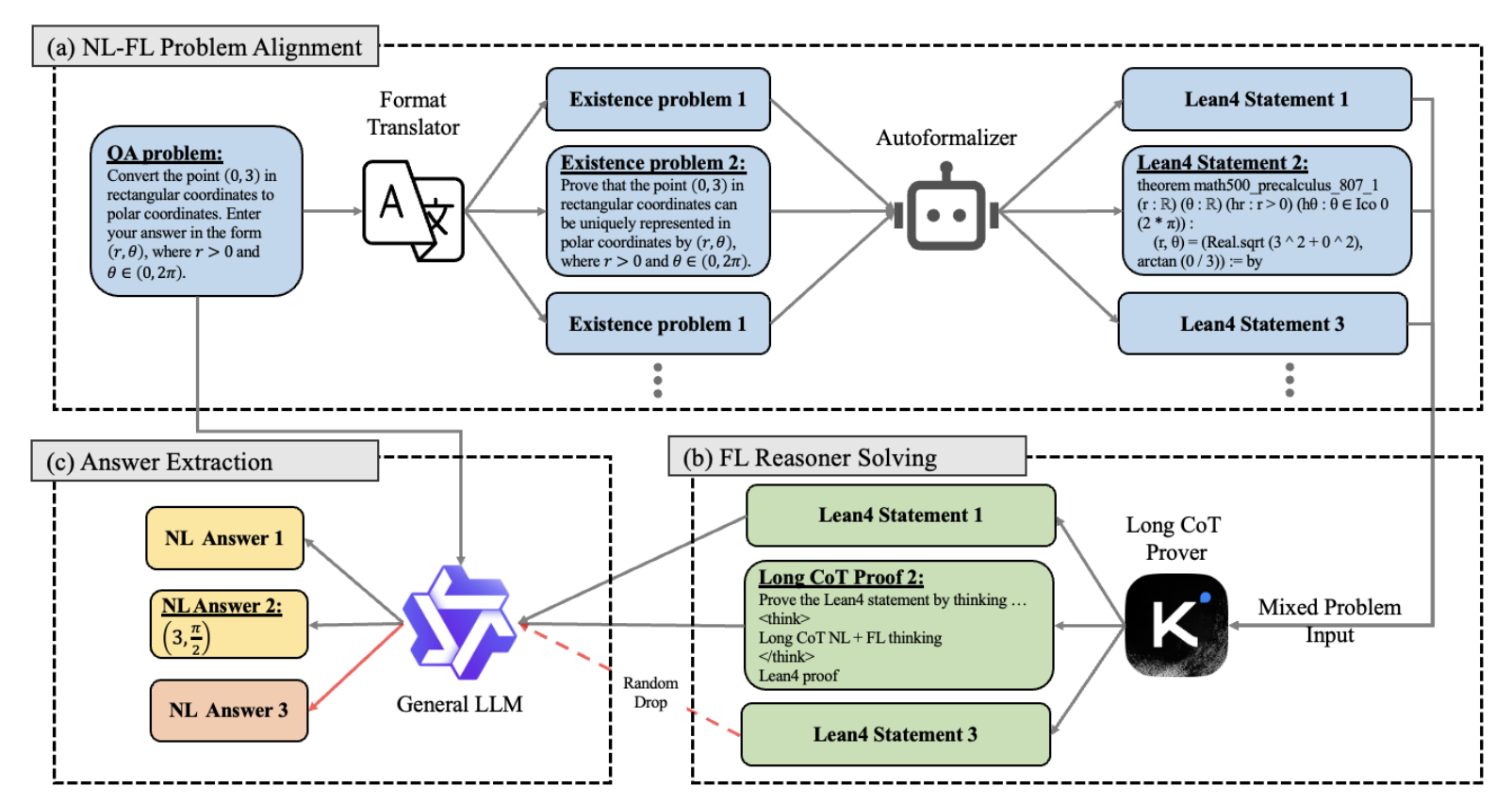

[EMNLP 2025 (Main)] FANS: Formal Answer Selection for LLM Natural Language Math Reasoning Using Lean4

Jiarui Yao*, Ruida Wang*, Tong Zhang (* indicates first authors)

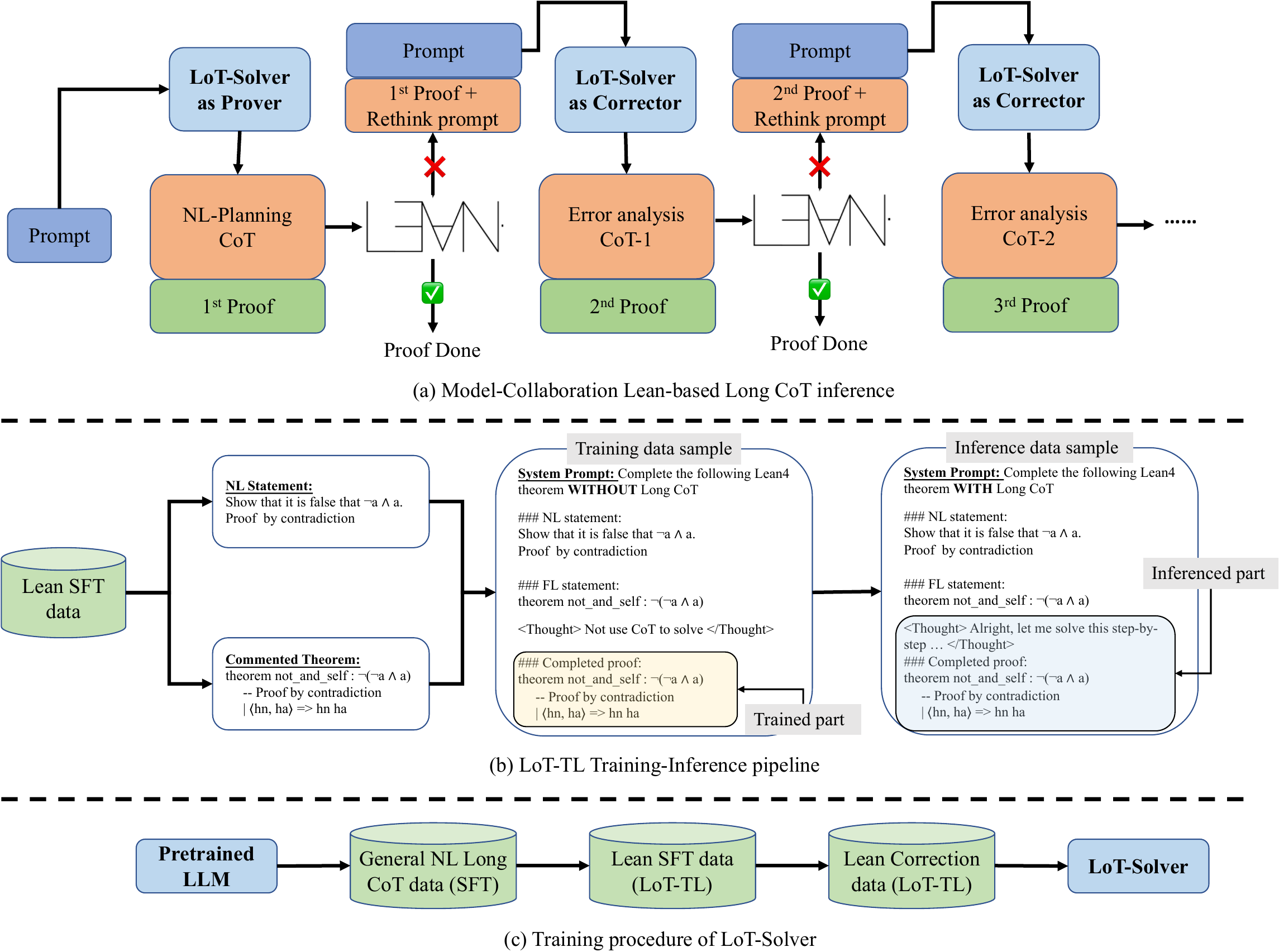

[ICML 2025] MA-LoT: Model-Collaboration Lean-based Long Chain-of-Thought Reasoning enhances Formal Theorem Proving

Ruida Wang*, Rui Pan*, Yuxin Li*, Jipeng Zhang, Yizhen Jia, Shizhe Diao, Renjie Pi, Junjie Hu, Tong Zhang (* indicates first authors)

[Website] [Paper] [Github] [Model Ckpt]

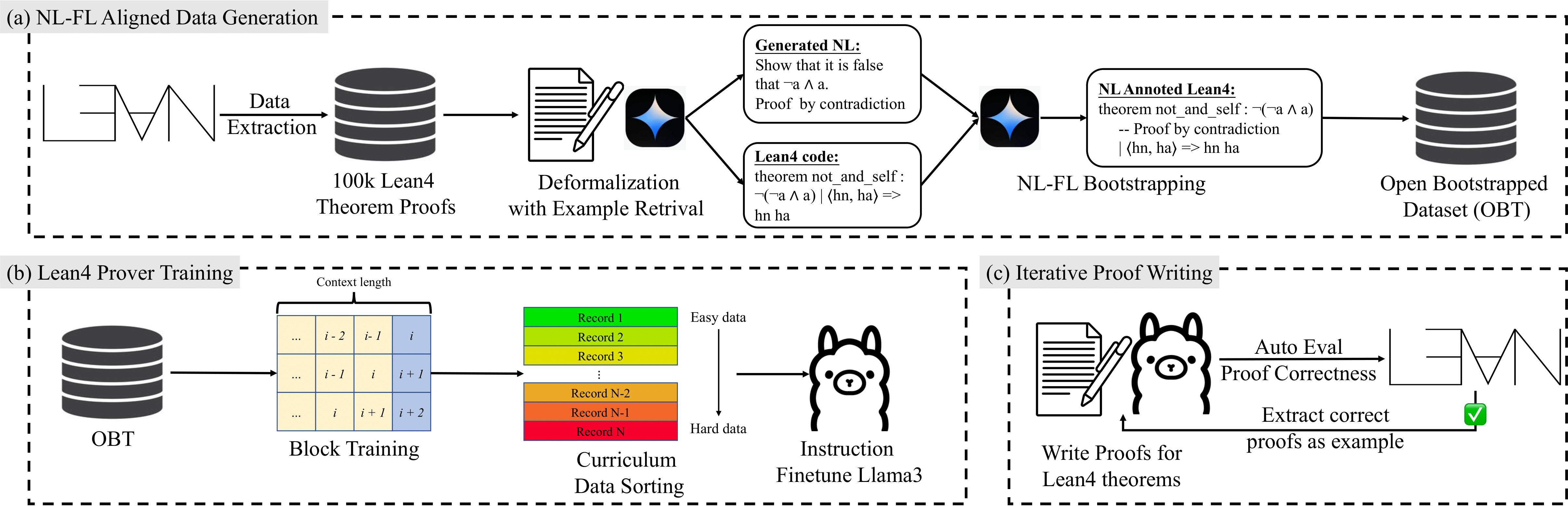

[EMNLP 2024 (Main)] TheoremLlama: Transforming General-Purpose LLMs into Lean4 Experts

Ruida Wang*, Jipeng Zhang*, Yizhen Jia*, Rui Pan, Shizhe Diao, Renjie Pi, Tong Zhang (* indicates first authors)

[Paper] [Github] [Model Ckpt] [OBT Dataset]

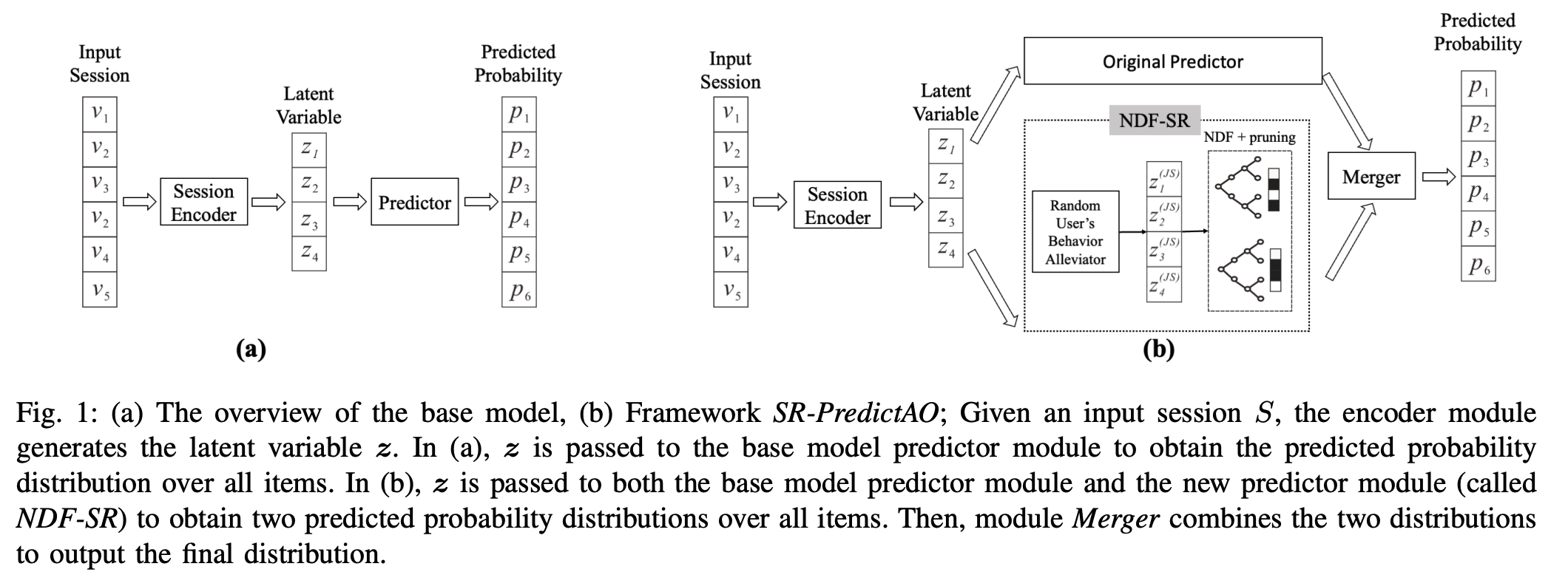

[ICDM 2024] SR-PredictAO: Session-based Recommendation with High-Capability Predictor Add-On

Ruida Wang, Raymond Chi-Wing Wong, Weile Tan

[ECCV 2024] DragVideo: Interactive Drag-style Video Editing

Yufan Deng*, Ruida Wang*, Yuhao Zhang*, Yu-Wing Tai, Chi-Keung Tang (* indicates equal contributions)

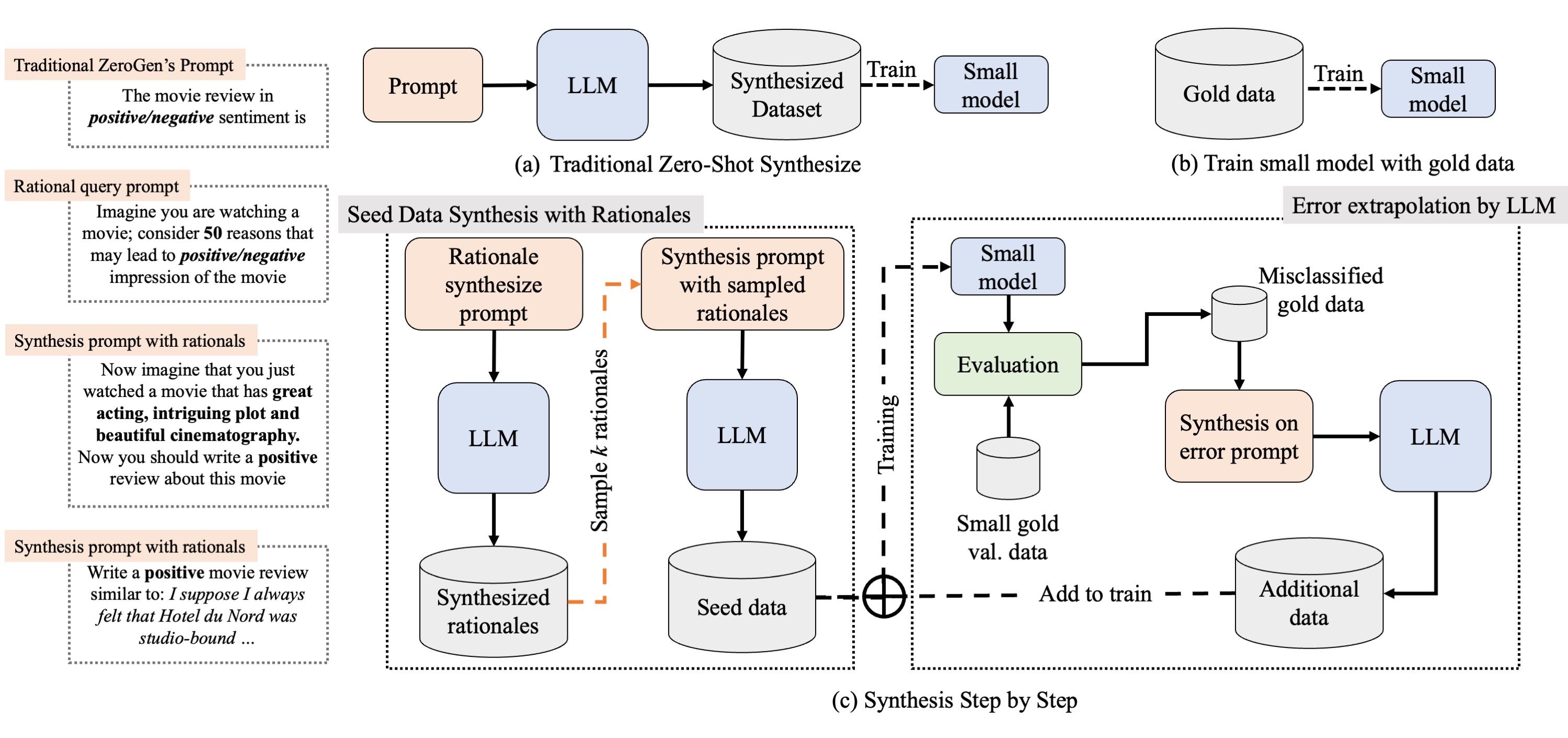

[EMNLP 2023 (Findings)] Let’s Synthesize Step by Step: Iterative Dataset Synthesis with Large Language Models by Extrapolating Errors from Small Models

Ruida Wang, Wangchunshu Zhou, Mrinmaya Sachan